We hear a lot about artificial intelligence (AI) and machine learning (ML) models, but building a high-performing model is only half the battle. The real challenge lies in deployment: ensuring that these models operate reliably in production environments where they can deliver value to end users.

Enter Kubernetes, the open-source container orchestration platform that has become the de facto standard for deploying and managing applications at scale. In this post, we’ll explore how Kubernetes can be used to deploy machine learning models in production, the benefits it offers, and best practices for smooth and secure ML model deployment.

Why Kubernetes for Machine Learning?

Machine learning models, unlike traditional software, have unique requirements when it comes to deployment. They often require specific dependencies, libraries, and frameworks, and their performance can vary significantly based on the underlying infrastructure.

Kubernetes addresses these challenges by providing a robust platform for managing containerized applications. Here’s why Kubernetes is an excellent choice for cloud-native machine learning deployment:

1. Scalability: ML models often need to handle varying workloads, especially in real-time applications. Kubernetes allows you to scale your deployments up or down automatically based on demand, ensuring optimal resource utilization.

2. Portability: Kubernetes abstracts away the underlying infrastructure, making it easier to deploy ML models across different environments, whether it’s on-premises, in the cloud, or in hybrid setups.

3. Resource Management: ML models can be resource-intensive, requiring significant CPU, GPU, or memory. Kubernetes enables efficient resource allocation and management, ensuring that your models run smoothly without overloading the system.

4. Fault Tolerance: Kubernetes provides self-healing capabilities, automatically restarting failed containers and ensuring high availability for your ML applications.

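5. Versioning and Rollbacks: Deploying ML models often involves frequent updates and experimentation. A Kubernetes Deployment keeps a revision history of its rollouts, so you can revert to a previous revision (for example, with kubectl rollout undo) if something goes wrong. Note that this versions the Deployment spec, such as the image tag, so each model version should be published under its own image tag.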

The Role of DevSecOps for ML Models on Kubernetes

As machine learning becomes increasingly integrated into business-critical operations, securing ML models is paramount. DevSecOps for ML models on Kubernetes integrates security into the entire ML lifecycle, from model development to deployment and monitoring.

DevSecOps embeds security practices into the DevOps pipeline so that vulnerabilities are addressed proactively at every stage of development, testing, and deployment. For ML models, this approach is particularly important, because model artifacts often encode proprietary algorithms and the data they are trained on or served with can be sensitive.

Key Steps to Deploy ML Models with Kubernetes

Deploying an ML model to Kubernetes in an enterprise setting involves several key steps, from containerizing the model to managing the deployment in a production environment. Let’s break down the process:

1. Containerize the ML Model

The first step is to package your ML model and its dependencies into a container. Docker is the most commonly used tool for this purpose. Create a Dockerfile that specifies the base image, installs the necessary libraries, and includes the model artifacts. Once the Dockerfile is ready, build the container image and push it to a container registry like Docker Hub or a private registry.

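    # Example Dockerfile for an ML model
    FROM python:3.8-slim

    WORKDIR /app

    # Install dependencies first so this layer is cached between code changes
    COPY requirements.txt .
    RUN pip install --no-cache-dir -r requirements.txt

    # Copy the application code and model artifacts
    COPY . .

    CMD ["python", "app.py"]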

2. Define Kubernetes Resources

Next, define the Kubernetes resources needed to deploy your model. The most common resources include:

- Deployment: Defines how the application should run, including the number of replicas, the container image, and resource requests and limits.

- Service: Gives the pods a stable network endpoint. The default ClusterIP type is reachable only from inside the cluster; external access requires a NodePort or LoadBalancer Service, or an Ingress.

- ConfigMap and Secret: Store configuration data and sensitive information, such as API keys or database credentials.
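As a rough sketch of the last item, a ConfigMap and a Secret for the model server might look like the following. Every name, key, and value here (ml-model-config, ml-model-secrets, MODEL_PATH, LOG_LEVEL, API_KEY) is a hypothetical placeholder, not something the application is known to read.

```yaml
# Hypothetical names and values throughout; adjust to what your app reads.
apiVersion: v1
kind: ConfigMap
metadata:
  name: ml-model-config
data:
  MODEL_PATH: "/app/models/model.pkl"   # assumed location of the model artifact
  LOG_LEVEL: "info"
---
apiVersion: v1
kind: Secret
metadata:
  name: ml-model-secrets
type: Opaque
stringData:
  # stringData accepts plain text; the API server stores it base64-encoded.
  API_KEY: "replace-me"
```

A container can load both as environment variables through envFrom with a configMapRef and a secretRef. Keep in mind that Secret values are only base64-encoded by default, so real credentials should not be committed to source control in a manifest like this one.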

Here’s an example of a Kubernetes Deployment YAML file:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: ml-model-deployment
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: ml-model
      template:
        metadata:
          labels:
            app: ml-model
        spec:
          containers:
            - name: ml-model-container
              image: your-registry/ml-model:latest
              ports:
                - containerPort: 5000
              resources:
                limits:
                  cpu: "1"
                  memory: "1Gi"
                requests:
                  cpu: "500m"
                  memory: "512Mi"
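The Deployment on its own does not make the model reachable. A matching Service could look like the sketch below, which assumes the app: ml-model label and container port 5000 from the Deployment above. The Service name and the choice of port 80 are illustrative.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: ml-model-service   # hypothetical name
spec:
  type: ClusterIP          # internal only; use LoadBalancer or an Ingress for external traffic
  selector:
    app: ml-model          # must match the pod labels set in the Deployment
  ports:
    - name: http
      port: 80             # port that clients inside the cluster connect to
      targetPort: 5000     # containerPort declared in the Deployment
```

ClusterIP is used here because inference endpoints are often called by other in-cluster services. Whether to expose the model outside the cluster is a separate decision that depends on your environment.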

3. Deploy to Kubernetes

Once the Kubernetes resources are defined, use the kubectl command-line tool to deploy the application to your Kubernetes cluster:

    kubectl apply -f deployment.yaml

4. Monitor and Scale

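After deployment, monitor the performance of your ML model using the resource metrics collected by Metrics Server (viewable with kubectl top) or third-party solutions like Prometheus and Grafana. Use the Horizontal Pod Autoscaler (HPA) to automatically scale the number of replicas based on CPU or memory usage. The HPA needs a metrics source such as Metrics Server in the cluster, and utilization targets are calculated relative to each container’s resource requests, so the Deployment must set them.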

    # autoscaling/v2 is the stable HPA API (v2beta2 was removed in Kubernetes 1.26)
    apiVersion: autoscaling/v2
    kind: HorizontalPodAutoscaler
    metadata:
      name: ml-model-hpa
    spec:
      scaleTargetRef:
        apiVersion: apps/v1
        kind: Deployment
        name: ml-model-deployment
      minReplicas: 1
      maxReplicas: 10
      metrics:
        - type: Resource
          resource:
            name: cpu
            target:
              type: Utilization
              averageUtilization: 80
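To make the effect of the 80 percent target more concrete, the scaling rule described in the Kubernetes documentation can be summarized as follows. Treat this as a simplified view: the real controller also applies a tolerance band and stabilization windows before it changes the replica count.

$$
\text{desiredReplicas} = \left\lceil \text{currentReplicas} \times \frac{\text{currentMetricValue}}{\text{desiredMetricValue}} \right\rceil
$$

For example, if 4 replicas are running at an average CPU utilization of 100 percent of their requests and the target is 80 percent, the HPA aims for

$$
\left\lceil 4 \times \frac{100}{80} \right\rceil = \lceil 5 \rceil = 5
$$

replicas, always bounded by minReplicas and maxReplicas.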

Industry Use Cases: Real-World Applications of Kubernetes for ML Deployment

Kubernetes has proven to be a game-changer for deploying machine learning models across various industries. Let’s explore some compelling use cases:

1. Financial Services

Fraud detection is a critical application of machine learning in the financial services industry. Banks and payment processors use ML models to analyze transaction data in real time and identify suspicious activity. These models need to handle massive volumes of data and return low-latency predictions to prevent fraud effectively.

How Kubernetes Helps:

- Kubernetes enables the deployment of fraud detection models at scale, ensuring high availability and fault tolerance.

- With auto-scaling, the system can handle sudden spikes in transaction volumes during peak hours.

- Kubernetes’ ability to manage GPU resources ensures that compute-intensive models run efficiently.
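The GPU point above can be illustrated with a minimal pod spec. This sketch assumes NVIDIA GPUs and that the NVIDIA device plugin is installed on the cluster, which is what makes the nvidia.com/gpu resource name available. The pod name is hypothetical.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: ml-model-gpu        # hypothetical name
spec:
  containers:
    - name: ml-model-container
      image: your-registry/ml-model:latest
      resources:
        limits:
          nvidia.com/gpu: 1   # GPUs are set under limits and only in whole units
```

The scheduler will place this pod only on a node that advertises a free GPU, so the pod stays Pending if none is available. In a real deployment the same resources block would go inside the Deployment's pod template.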

2. Healthcare

In healthcare, predictive analytics models are used to forecast patient outcomes, optimize treatment plans, and manage hospital resources. These models often require processing large datasets, including electronic health records (EHRs) and medical imaging data.

How Kubernetes Helps:

- Kubernetes allows healthcare organizations to deploy predictive analytics models across multiple hospitals or clinics, ensuring consistent performance.

- The platform’s self-healing capabilities ensure that critical healthcare applications remain operational even in the event of failures.

- Kubernetes’ support for GPU-accelerated workloads enables faster processing of medical imaging data.
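The self-healing behavior mentioned above works best when Kubernetes can tell whether the model server is actually healthy. One common way to do that is with liveness and readiness probes, sketched below. The /healthz and /ready paths are assumptions about the application, not endpoints it is known to expose, and the timing values are placeholders to tune.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: ml-model-probed       # hypothetical name
  labels:
    app: ml-model
spec:
  containers:
    - name: ml-model-container
      image: your-registry/ml-model:latest
      ports:
        - containerPort: 5000
      readinessProbe:          # pod receives traffic only while this succeeds
        httpGet:
          path: /ready         # assumed endpoint: returns 200 once the model is loaded
          port: 5000
        initialDelaySeconds: 10
        periodSeconds: 5
      livenessProbe:           # container is restarted after repeated failures
        httpGet:
          path: /healthz       # assumed endpoint: returns 200 while the process is responsive
          port: 5000
        initialDelaySeconds: 30
        periodSeconds: 10
        failureThreshold: 3
```

Large models can take a while to load, so the initial delays usually need to be longer than for a typical web service.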

3. E-commerce

E-commerce platforms rely on recommendation engines to personalize the shopping experience for customers. These engines analyze user behavior and product data in real time to suggest relevant products, driving higher engagement and sales.

How Kubernetes Helps:

- Kubernetes enables the deployment of recommendation engines that can handle millions of user interactions simultaneously.

- The platform’s auto-scaling capabilities ensure that the system can handle traffic spikes during sales events or holidays.

- Kubernetes’ support for rolling updates allows e-commerce companies to continuously improve their recommendation algorithms without downtime.
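As an illustration of the rolling update point, a Deployment can state how many pods may be replaced at a time. The names, image tag, and replica count below are hypothetical.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: recommender            # hypothetical name
spec:
  replicas: 4
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 0        # never drop below the desired replica count
      maxSurge: 1              # add at most one extra pod during the rollout
  selector:
    matchLabels:
      app: recommender
  template:
    metadata:
      labels:
        app: recommender
    spec:
      containers:
        - name: recommender
          image: your-registry/recommender:v2   # hypothetical new model version
          ports:
            - containerPort: 5000
```

With these settings, Kubernetes starts one new pod, waits for it to become ready, and only then removes an old one. This avoids downtime only if the container defines a readiness probe that reflects when the model can actually serve requests.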

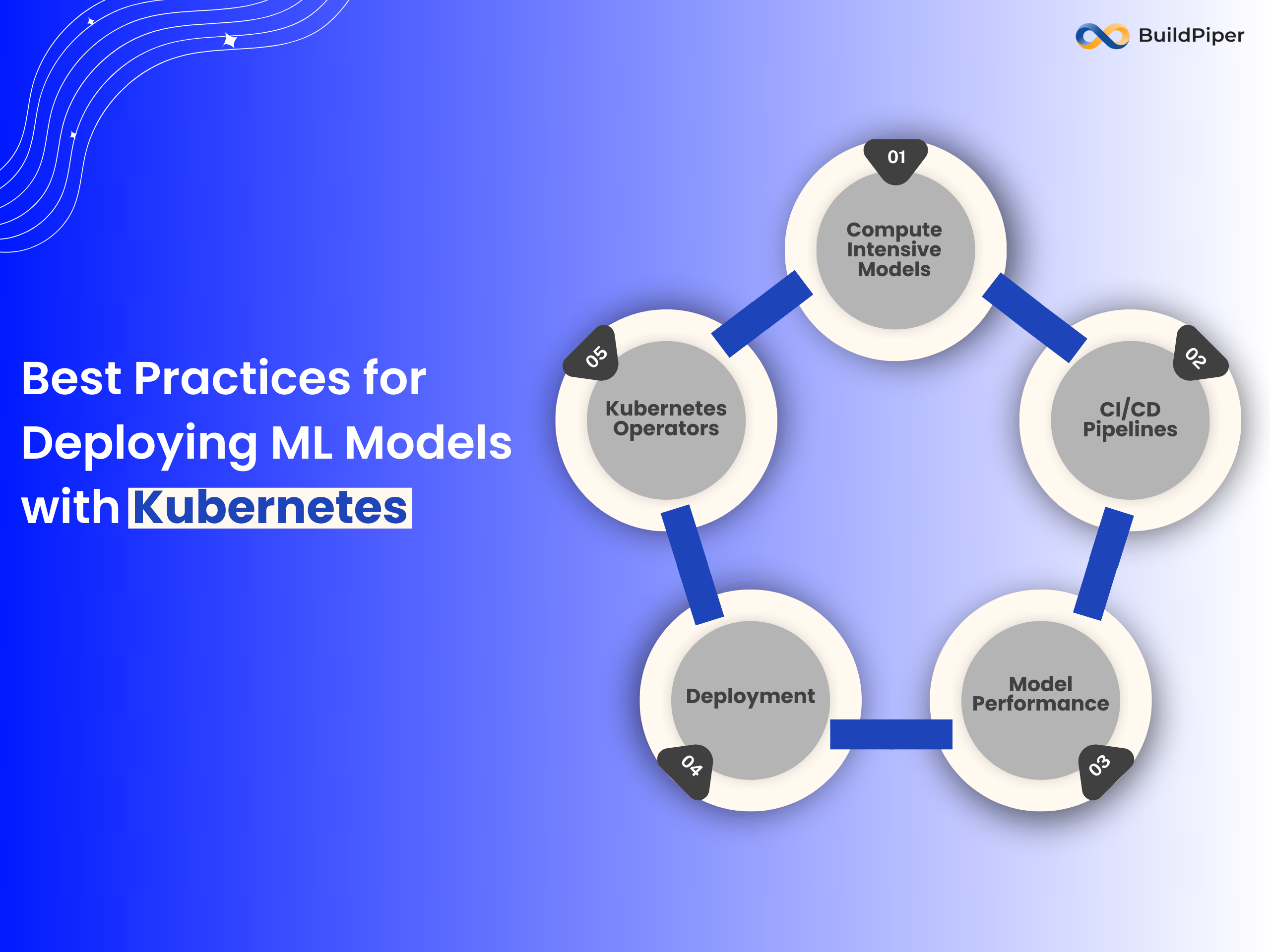

Best Practices for Deploying ML Models with Kubernetes

For efficient enterprise ML model deployment with Kubernetes, CI/CD pipelines and ML tooling built on Kubernetes Operators, such as Kubeflow, help streamline model lifecycle management. Here are some best practices:

1. GPUs for Compute-Intensive Models: If your ML model requires heavy computation, consider using GPU-enabled nodes in your Kubernetes cluster. Kubernetes supports GPU resource allocation through vendor device plugins, allowing you to use GPUs for inference.

2. CI/CD Pipelines: Automate the deployment process using Continuous Integration and Continuous Deployment (CI/CD) pipelines. Tools like Jenkins, GitLab CI, or Argo CD can help streamline the build, test, and deployment process.

3. Model Performance Monitoring: Continuously monitor your deployed models to detect issues like model drift or degradation in accuracy. Tools like MLflow or Kubeflow can help track model metrics across versions, alongside Prometheus for serving metrics such as latency and error rate.

4. Security: Ensure that your Kubernetes cluster and ML deployment are secure. Use role-based access control (RBAC), network policies, and secrets management to protect sensitive data.

5. Kubernetes Operators: For complex ML workflows, consider using ML platforms built on Kubernetes Operators, such as Kubeflow or Seldon Core. They provide custom resources and controllers specifically designed for managing ML workloads.
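For the security practice above, a network policy is one of the simpler controls to start with. The sketch below allows traffic to the model pods only from pods carrying a particular label. The policy name and the role: api-gateway label are hypothetical, and the policy has an effect only if the cluster's network plugin enforces NetworkPolicy.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: ml-model-allow-gateway   # hypothetical name
spec:
  podSelector:
    matchLabels:
      app: ml-model              # the policy applies to the model pods
  policyTypes:
    - Ingress
  ingress:
    - from:
        - podSelector:
            matchLabels:
              role: api-gateway  # hypothetical label on the permitted clients
      ports:
        - protocol: TCP
          port: 5000
```

Once a pod is selected by an Ingress policy, all inbound traffic that is not explicitly allowed is dropped, so any monitoring agent that scrapes the pods needs its own rule.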

Conclusion

Deploying ML models in an enterprise, and keeping them secure in production, is a complex but critical task that requires careful planning and execution. Kubernetes provides a powerful platform for managing the deployment, scaling, and monitoring of ML models, making it a strong choice for organizations looking to operationalize their AI initiatives.

Whether you’re embarking on cloud-native machine learning deployment or scaling your ML workflows, Kubernetes offers the tools and features necessary for a seamless transition into production.