Introduction

Some of the largest organizations in the world use KEDA to run production workloads at massive scale in the cloud, scaling on practically any metric from almost any metrics provider.

The example given here runs on Azure Kubernetes Service (AKS), but you can use the same approach with hardened Kubernetes clusters as well.

In this article, I’ll explain what Kubernetes administrators can do using KEDA (Kubernetes Event-driven Autoscaling) and how to get started.

You’ll also learn how Kubernetes autoscaling works and much more!

Let’s Begin!

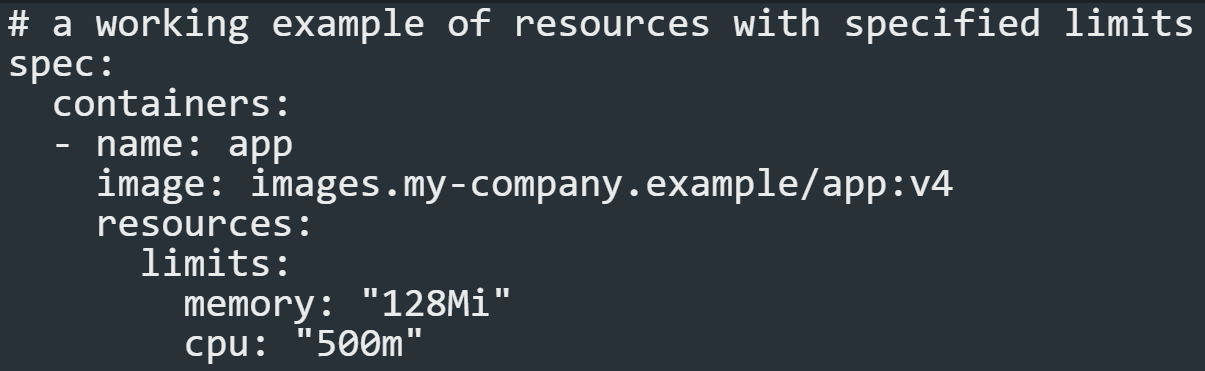

KEDA uses the Kubernetes Metrics Server to obtain basic CPU and memory metrics. The Metrics Server is not deployed by default on some Kubernetes distributions, such as EKS on AWS. The resources section of the relevant Kubernetes Pods must also include limits (at a minimum).

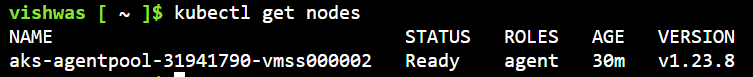

Prerequisite

- Kubernetes cluster version 1.5 or above is required.

- You need the administrator role on the Kubernetes cluster. Grant it through RBAC with Azure Active Directory on AKS, or through an IAM role if the cluster runs on AWS.

- The Kubernetes Metrics Server must be installed. Setup procedures differ depending on your Kubernetes provider.

- Your Kubernetes Pod spec must have a resources section with requests set (or limits, which Kubernetes uses as the requests when none are given). See Pods and Containers Resource Management. If the resources section is empty (resources: or something similar), you will get the missing request for “cpu/memory” error.
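To make the last prerequisite concrete, here is a minimal sketch of a Deployment whose container declares requests and limits. The name my-app, the nginx image, and the resource figures are placeholders I picked for illustration, not values from a real setup.

```yaml
# Hypothetical Deployment; name, labels, image, and numbers are placeholders.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app
spec:
  replicas: 1
  selector:
    matchLabels:
      app: my-app
  template:
    metadata:
      labels:
        app: my-app
    spec:
      containers:
      - name: my-app
        image: nginx:1.25      # placeholder image
        resources:
          requests:            # Utilization targets are a percentage of these values
            cpu: 100m
            memory: 128Mi
          limits:              # hard caps for the container
            cpu: 250m
            memory: 256Mi
```

With requests in place, the autoscaler has a baseline against which to compute a utilization percentage.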

What is KEDA?

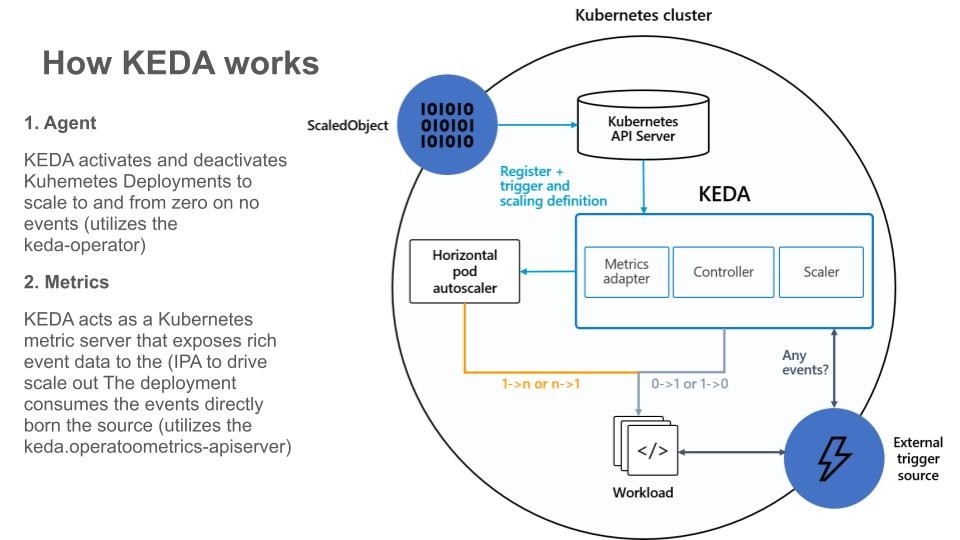

Kubernetes Event-driven Autoscaling (KEDA) is an autoscaling mechanism similar to the built-in Kubernetes Horizontal Pod Autoscaler (HPA). KEDA still uses the HPA under the hood to do its magic.

You may access the official KEDA website here. KEDA offers excellent documentation, so installation is simple. KEDA is an open-source cloud-native project backed by Microsoft, and Azure Kubernetes Service (AKS) offers complete support for it. Large organizations like Microsoft, Zapier, Alibaba Cloud, and many more use it, so it is proven in production at a huge scale.

Why Is KEDA a Game-Changer?

For reference, here is a real-world application of KEDA in production:

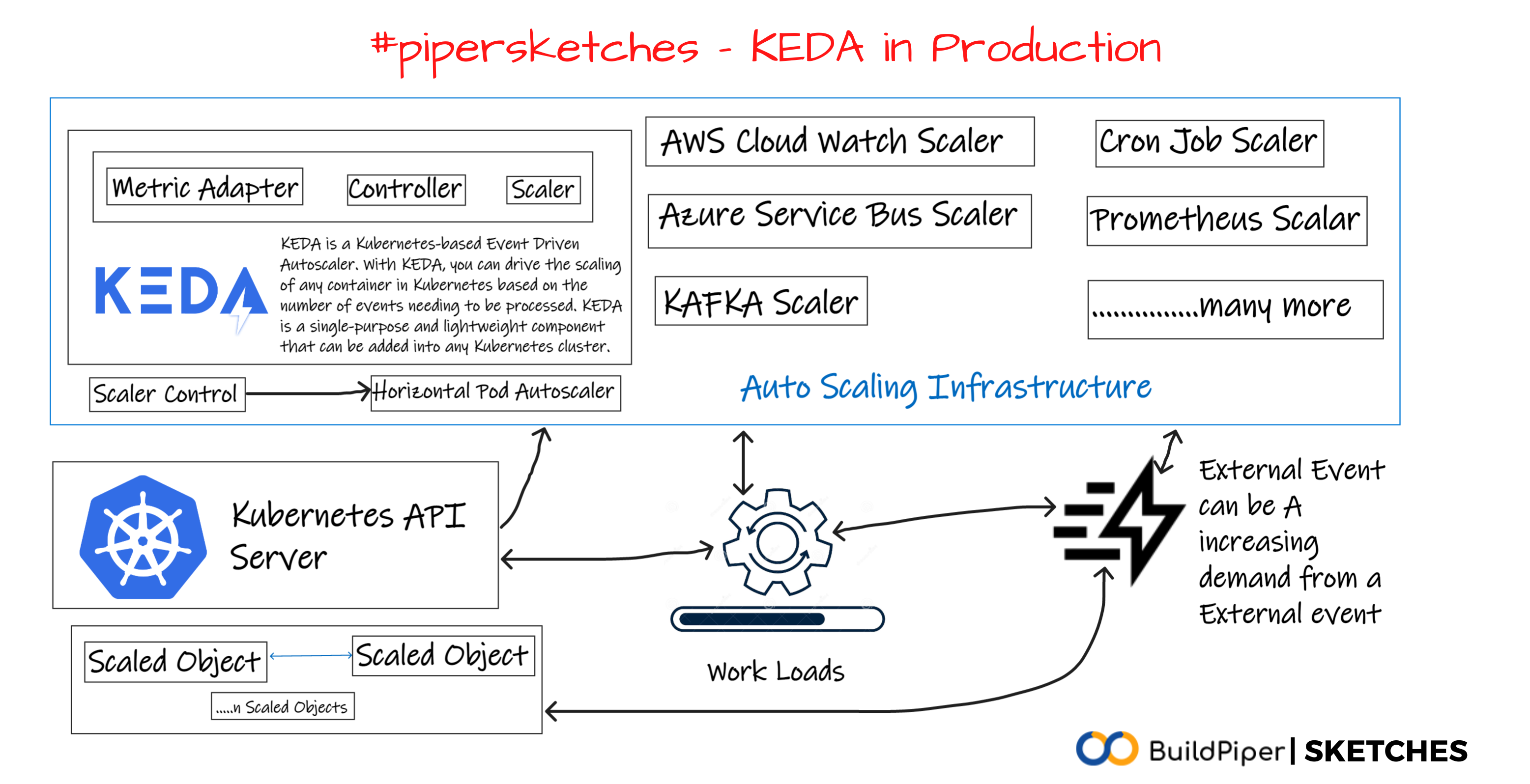

KEDA allows Kubernetes to scale pod replicas from zero to any number, based on metrics such as the depth of a message queue, requests per second, cron schedules, custom metrics from your own application logging, and pretty much any other metric you can think of. The built-in HPA in Kubernetes cannot do this easily. KEDA supports a wide range of scalers for different metric providers.
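As a small illustration of scaling from zero on something other than CPU or memory, here is a sketch of a ScaledObject that uses KEDA's cron scaler. The object name, target Deployment, time zone, and schedule are assumptions made up for this example.

```yaml
apiVersion: keda.sh/v1alpha1
kind: ScaledObject
metadata:
  name: my-app-cron            # hypothetical name
spec:
  scaleTargetRef:
    name: my-app               # hypothetical Deployment in the same namespace
  minReplicaCount: 0           # outside the window the workload can drop to zero
  maxReplicaCount: 10
  triggers:
  - type: cron
    metadata:
      timezone: Asia/Kolkata   # IANA time zone name (assumed)
      start: "0 9 * * *"       # window opens at 09:00
      end: "0 18 * * *"        # window closes at 18:00
      desiredReplicas: "5"     # replicas to run while the window is open
```

Outside the start and end window, the workload falls back to minReplicaCount, which is how the scale-to-zero behavior shows up here.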

How Does KEDA Operate?

A glimpse of how Kubernetes Autoscaling works!

KEDA monitors metrics from an external metrics provider, such as Azure Monitor, and scales according to the value of the metric. It communicates directly with the system that provides the metrics. It runs as a single-pod Kubernetes operator and monitors continually.
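Since KEDA hands the actual replica calculation to the HPA, it helps to recall the core rule that the Kubernetes documentation gives for the HPA. The numbers in the second line are made up purely for illustration.

```latex
\[
\text{desiredReplicas} = \left\lceil \text{currentReplicas} \times
  \frac{\text{currentMetricValue}}{\text{desiredMetricValue}} \right\rceil
\]
% Illustrative numbers: 4 replicas at 80% average utilization with a 50% target
\[
\left\lceil 4 \times \frac{80}{50} \right\rceil = \lceil 6.4 \rceil = 7
\]
```

In other words, the further the observed metric is above the target, the more replicas are requested, always rounded up.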

How to Set Up KEDA?

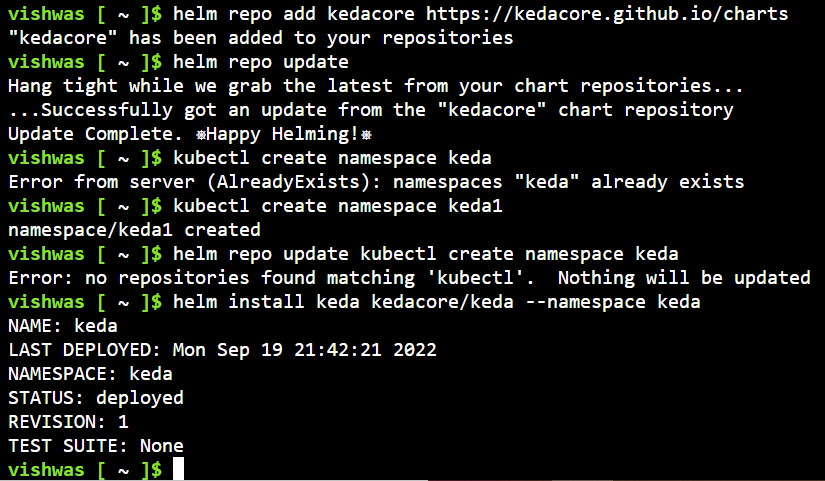

The easiest way to install KEDA (Kubernetes Event-driven Autoscaling) is to use a Helm chart:

> helm repo add kedacore https://kedacore.github.io/charts

> helm repo update

> kubectl create namespace keda

> helm install keda kedacore/keda --namespace keda

Run these installation commands from the Azure CLI or Azure PowerShell.

Setting up Scaling

Once KEDA has been deployed and is running in the cluster, you must create a manifest file that specifies what to scale, when to scale, and how to scale. I’ll show how to configure scaling based on popular metrics like CPU and memory.

You can find a list of all supported scaling sources and types in the documentation here.

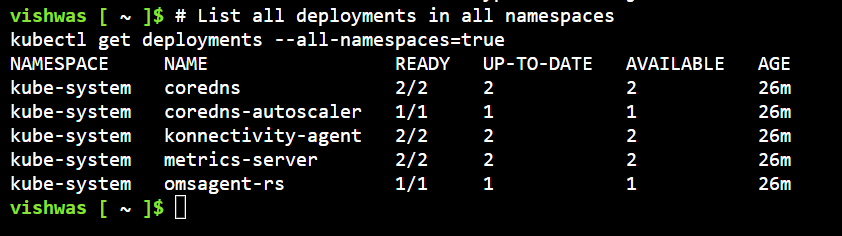

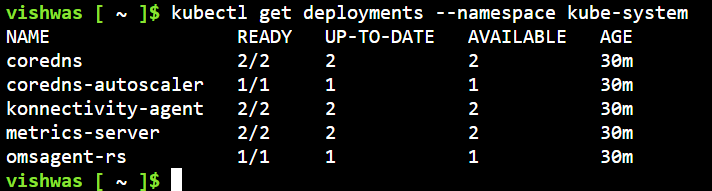

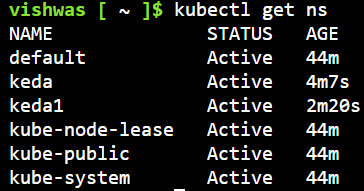

This is the list of resources in the namespace:

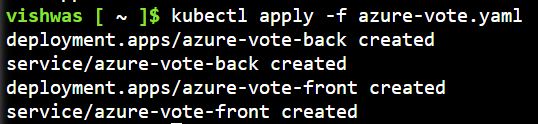

You can also deploy a sample workload first, such as the one provided in the Azure Kubernetes Service quickstart.

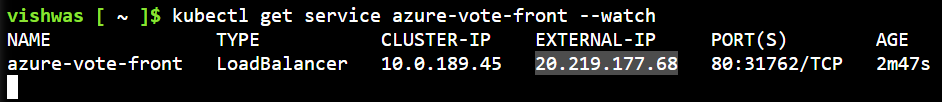

After deploying, you will get an external IP:

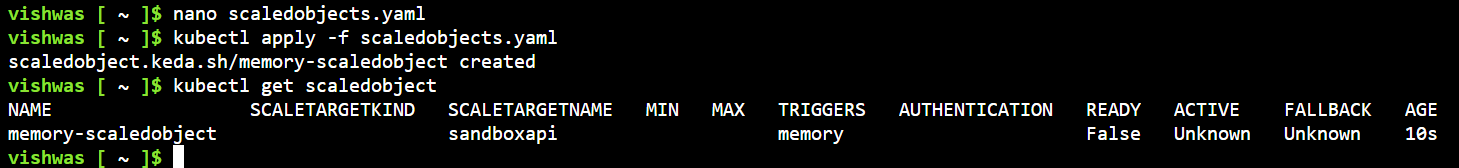

As with deployments, scaling is described in a YAML manifest file, with ScaledObject as the kind.

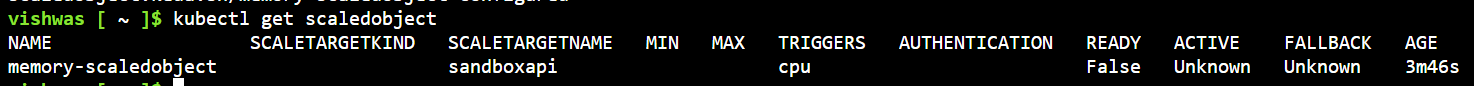
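In text form, the overall shape of such a manifest might look like the sketch below. The names my-app-scaler and my-app and the replica bounds are placeholders of mine; the pollingInterval and cooldownPeriod values shown are the defaults documented by KEDA, written out only to make them visible.

```yaml
apiVersion: keda.sh/v1alpha1
kind: ScaledObject             # the KEDA custom resource
metadata:
  name: my-app-scaler          # hypothetical name
  namespace: default
spec:
  scaleTargetRef:              # WHAT to scale
    name: my-app               # hypothetical Deployment in the same namespace
  pollingInterval: 30          # seconds between trigger checks
  cooldownPeriod: 300          # seconds to wait before scaling back to zero
  minReplicaCount: 1           # HOW far to scale down
  maxReplicaCount: 10          # HOW far to scale up
  triggers:                    # WHEN to scale: one or more scalers
  - type: cpu
    metadata:
      type: Utilization        # newer KEDA releases prefer a trigger-level metricType field
      value: "60"
```

You would apply it with kubectl apply -f, the same way as any other manifest.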

You can list the existing scaled objects:

> kubectl get scaledobject

And a scaled object can be deleted with this command:

> kubectl delete scaledobject <name of scaled object>

You can also use commands like this to list the namespaces in the cluster:

> kubectl get ns

or

> kubectl get namespaces

Let’s talk about scaling infrastructure (basic triggers)

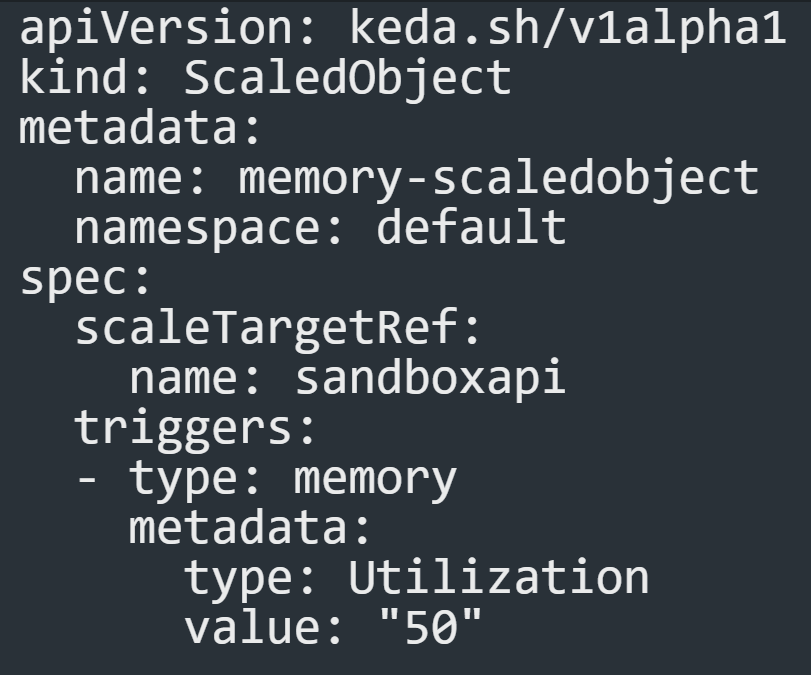

Memory Scaling

A scaling rule can be configured based on the amount of memory used by the containers in the pod.

- You can find the documentation on memory scaling here.

- You need to complete the Helm installation shown above first.

- These kinds of values can serve as the basis for scaling:

  - Utilization: the target value is the average of the resource metric across all relevant pods, expressed as a percentage of the requested value of the resource for the pods.

  - AverageValue: the target value is the average of the metric across all relevant pods (a quantity).

The manifest example below uses memory Utilization:

This example creates a rule that scales pods according to memory usage.

The deployment to be scaled is referenced by scaleTargetRef.

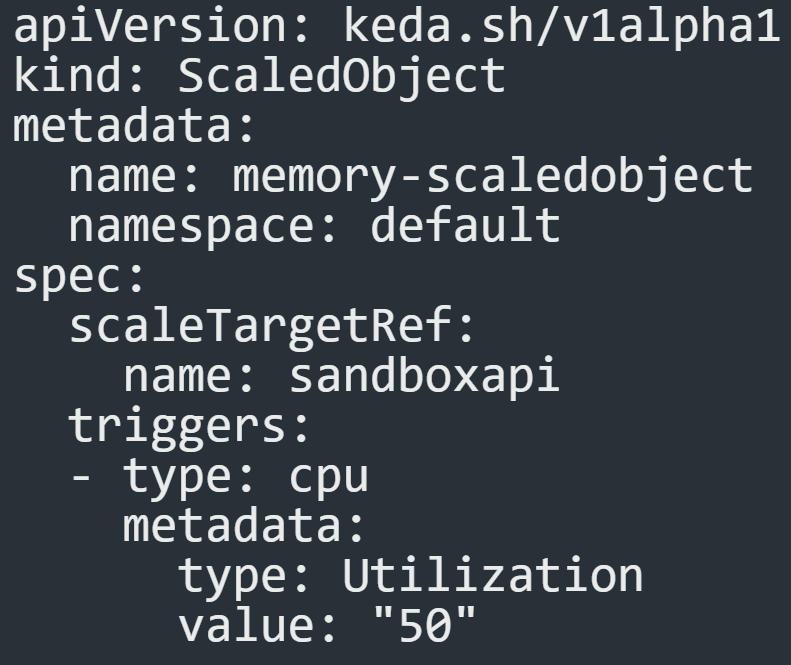

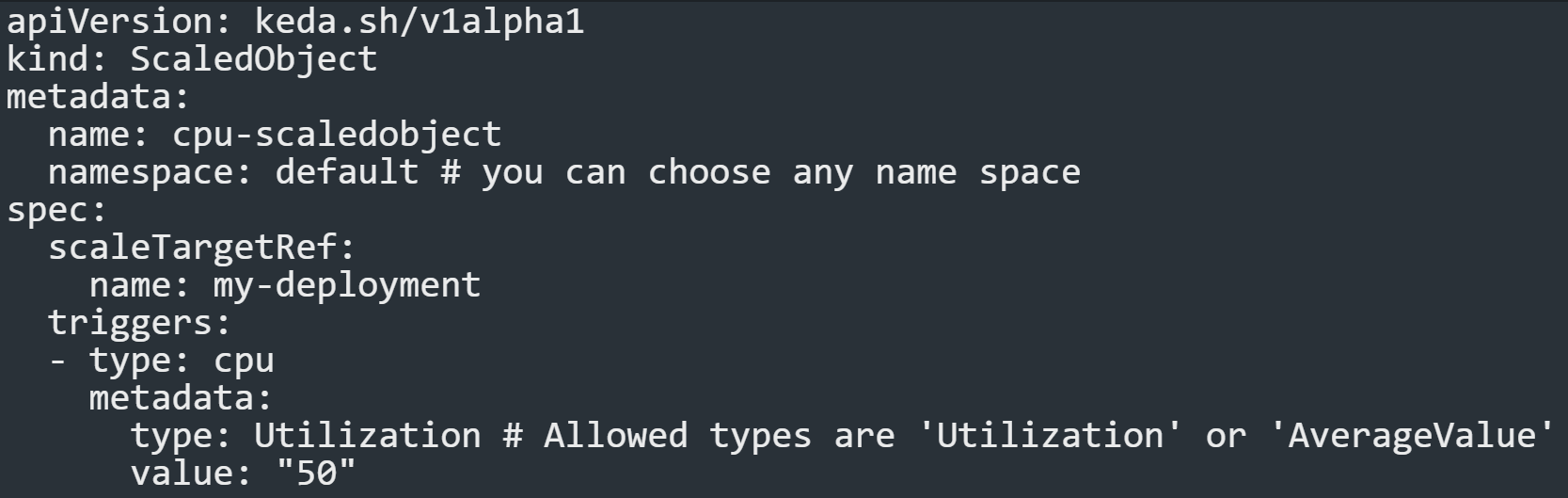
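For readers who prefer text over screenshots, a memory-based ScaledObject could be sketched roughly like this. The object name, the target Deployment my-app, the replica bounds, and the 50% target are all assumptions for illustration.

```yaml
apiVersion: keda.sh/v1alpha1
kind: ScaledObject
metadata:
  name: memory-scaledobject    # hypothetical name
  namespace: default
spec:
  scaleTargetRef:
    name: my-app               # hypothetical Deployment to scale
  minReplicaCount: 1           # cpu/memory-only triggers cannot scale to zero
  maxReplicaCount: 10
  triggers:
  - type: memory
    metadata:
      type: Utilization        # newer KEDA releases prefer a trigger-level metricType field
      value: "50"              # target: 50% of requested memory, averaged across pods
```

Remember that the Utilization percentage is measured against the memory request of the pods, so the target Deployment needs a resources section.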

CPU Scaling

Scaling can also be based on the CPU usage of the containers in the pod.

- These kinds of values can serve as the basis for scaling:

  - Utilization: the target value is the average of the resource metric across all relevant pods, expressed as a percentage of the requested value of the resource for the pods.

  - AverageValue: the target value is the average of the metric across all relevant pods (a quantity).

The manifest example below uses CPU Utilization:

This example creates a rule that scales pods according to the level of CPU usage.

The deployment to be scaled is referenced by scaleTargetRef.
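A CPU-based rule might be written as in the sketch below. As before, the names, the replica bounds, and the 60% target are illustrative assumptions rather than recommended values.

```yaml
apiVersion: keda.sh/v1alpha1
kind: ScaledObject
metadata:
  name: cpu-scaledobject       # hypothetical name
  namespace: default
spec:
  scaleTargetRef:
    name: my-app               # hypothetical Deployment to scale
  minReplicaCount: 1           # cpu/memory-only triggers cannot scale to zero
  maxReplicaCount: 10
  triggers:
  - type: cpu
    metadata:
      type: Utilization        # newer KEDA releases prefer a trigger-level metricType field
      value: "60"              # target: 60% of requested CPU, averaged across pods
```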

For further reference, see this YAML manifest, where I explain the fields under “spec”.

This gives you an idea of how the manifests are structured and which resources are considered.

Trigger Information

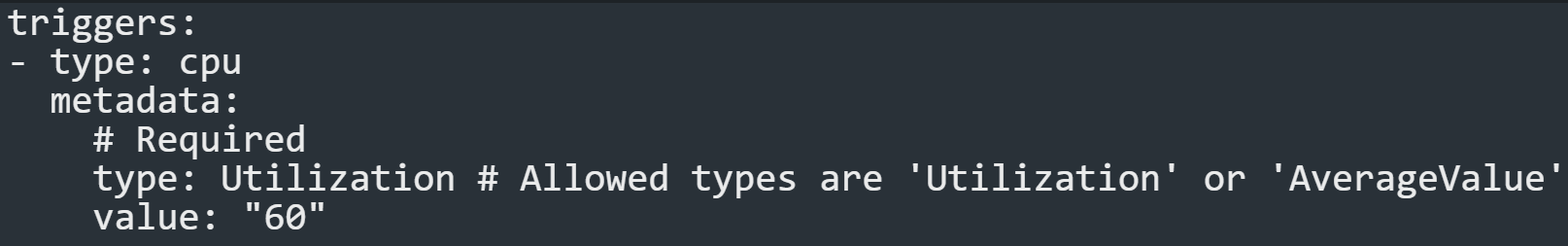

This specification describes the cpu trigger, which scales based on CPU metrics.

List of parameters

- type – The type of metric to use. The available options are Utilization and AverageValue.

- value – The value at which scaling actions are triggered:

  - With Utilization, the target value is the average of the resource metric across all relevant pods, expressed as a percentage of the requested value of the resource for the pods.

  - With AverageValue, the target value is the average of the metric across all relevant pods (a quantity).

Example with the comments as explanation
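Here is one way such a commented example could look, this time using AverageValue so that both metric types are covered. The names and the 500m target are hypothetical, and the comments reflect my reading of the parameters described above.

```yaml
apiVersion: keda.sh/v1alpha1
kind: ScaledObject
metadata:
  name: cpu-average-scaler     # hypothetical name
spec:
  scaleTargetRef:
    name: my-app               # hypothetical Deployment to scale
  minReplicaCount: 1
  maxReplicaCount: 10
  triggers:
  - type: cpu                  # the trigger described in this section
    metadata:
      type: AverageValue       # compare an absolute quantity, not a percentage
      value: "500m"            # target: 0.5 CPU cores per pod, averaged across pods
```

Unlike Utilization, AverageValue takes a Kubernetes quantity, so the target does not shift when you change the CPU request of the pods.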

Thus, utilization-based scaling is easy to set up for a deployment, but function-based triggers are much harder to get right in the world of microservices.

There are still many more challenges to be addressed.

Conclusion

So, this is how Kubernetes autoscaling works. In this article, I discussed KEDA, how it makes it simple to build highly scalable apps on Kubernetes, and how to get started. KEDA offers many scalers, so you can choose whichever one fits your precise needs.

Feel free to comment on this page if you have any queries.

BuildPiper is a popular microservices monitoring tool as well as a DevOps tool for developers. Consult our tech experts to discuss your critical business use cases and major security challenges. Schedule a demo today!